Safe Phrases: Stay safe against AI voice cloning


It only takes three seconds of audio content for AI to clone someone’s voice. And one call from a scammer to trick you into thinking a ‘friend’ needs money.

In support of the Home Office’s ‘Stop! Think Fraud’ campaign, we’re working to protect you from AI voice scams through one simple solution: Safe Phrases.

Anyone can fall for an AI voice clone, even James Nesbitt


What’s a Safe Phrase and how can you use it?

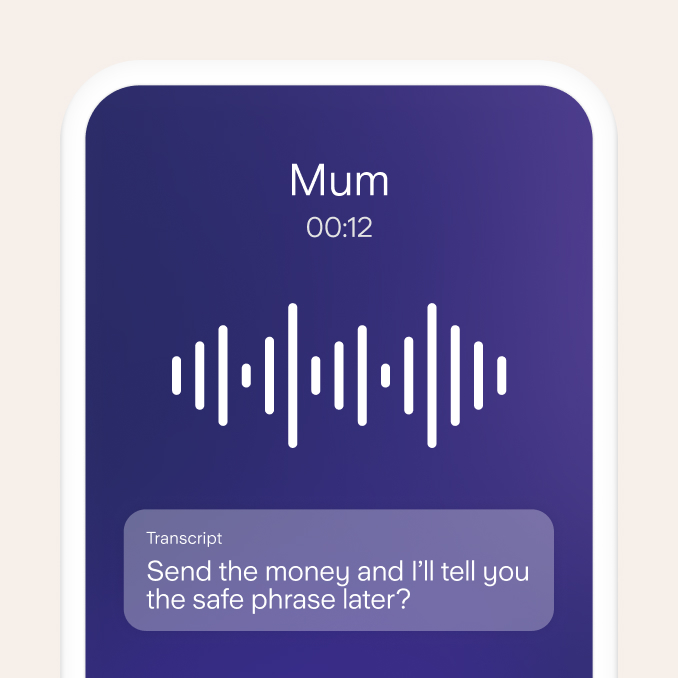

If ‘mum’ calls asking for money, would you really question whether it’s her? It sounds like her, but something feels out of character. This could be an AI voice cloning scam, and it’s exactly where a Safe Phrase comes in handy.

A Safe Phrase is a previously agreed phrase that you and your inner circle can use to verify if you’re truly speaking to them.

It can be anything, as long as it’s:

Simple yet random

Easy to remember

Different from your other passwords

Shared with friends and family in person

Just be sure to change it every now and again, and immediately if you think it’s no longer private.

How to remember your Safe Phrase

Keep it short and simple

If you must, write it on paper and store it safely (don’t save it anywhere digitally)

Create a mnemonic for the initials of each word

If the person you’re speaking to can’t tell you the Safe Phrase:

End the call and do not make any payments.

Contact them on another platform to check whether they can give you the Safe Phrase there, and to confirm whether it was really them.

Listen for the vocal tics or cues you know your friends and family have. They can help you tell whether someone sounds different from normal.

Remember, scammers will often use a method called spoofing, which is when they make a call or text look like it’s coming from someone you know.

What else can you do to stay safe?

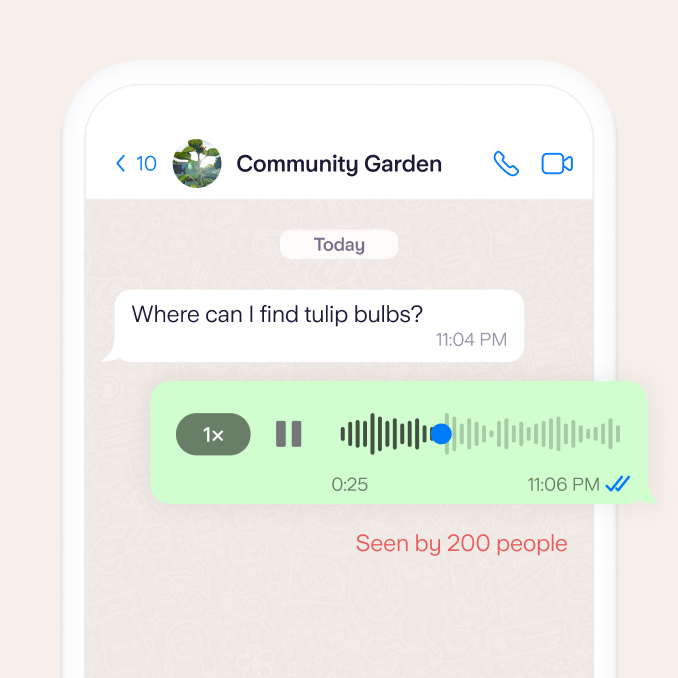

To stop AI voice cloning from the start, you need to make it difficult for scammers to access clips of your voice. They only need three seconds of audio content to make a clone.

Have a think about where you share your voice:

Is it shared publicly?

Are your social media accounts public or private?

Do you send voice notes in public group chats?

2.9 million cases of confirmed fraud were reported by UK Finance members in 2022.

Source: Annual Fraud Report 2023

28% of Brits believe they’ve been targeted by an AI voice cloning scam in the past year alone.

Source: Mortar Research 2024

Think you’ve been a victim of an AI voice cloning scam?

Call 159 to contact your bank immediately. For more information on protecting yourself against fraud and scams, head to our reporting fraud page.

Our commitment to you

We’ll never ask you to share your Safe Phrase with us. This should only be shared between you and any close friends or family.

We’re pleased to say that, to date, our customers haven’t reported AI voice cloning as an issue. If you have any questions, you can contact us 24/7 through the app, and a real human will be there to help.